Building a Data-Centric Platform for Generative AI and LLMs

Generative AI and large language models (LLMs) are changing how both developers and non-coders work by automating repetitive tasks and quickly generating insights from large amounts of data. Snowflake users are already building LLM-powered apps that call web-hosted LLM APIs through external functions and use Streamlit as an interactive front end, including AI plagiarism detection, an AI assistant, and MathGPT.
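For readers curious what the external-function route looks like on the remote side, here is a minimal sketch. It assumes the remote service is an AWS Lambda function behind an API Gateway proxy integration; Snowflake sends each batch of rows as a JSON body of the form `{"data": [[row_number, arg], ...]}` and expects the results back in the same shape. The `call_llm` helper is a hypothetical stub standing in for whichever web-hosted LLM API you use, so treat this as an illustration, not a production handler.

```python
import json
from typing import Any, Dict


def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a request to a web-hosted LLM API."""
    # Assumption: a real version would call your provider over HTTPS here.
    return f"[stub completion] {prompt[:40]}"


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Answer one batch of rows sent by a Snowflake external function."""
    # The request body is a JSON string: {"data": [[row_number, arg], ...]}
    rows = json.loads(event["body"])["data"]

    # Echo each row number back so Snowflake can match results to input rows.
    results = [[row[0], call_llm(str(row[1]))] for row in rows]

    return {"statusCode": 200, "body": json.dumps({"data": results})}
```

Keeping the row number as the first element of every result is what lets Snowflake line each completion up with the row that produced the prompt.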

When asked what trends are driving data and AI, I pointed to two broad themes. The first is that more models and algorithms are being productionized and rolled out to end users in interactive ways. The second, with the power to be more pervasive than I can even imagine, is generative AI and LLMs. LLMs have the potential to help both developers and less technically inclined users make sense of the world’s data.

LLM applicability is evolving quickly, and business and technical leaders are realizing that for these technologies to drive long-term impact, they need to move fast and adopt the latest models, customized with internal data, without compromising security. It is particularly important to protect the sensitive or proprietary data used to contextualize LLMs, such as source code, PII, internal documents, and wikis, along with the prompts themselves.

From inception, Snowflake has focused on the security and governance of data by bringing compute to the data rather than creating new copies and additional silos. This focus shaped our approach to Snowpark, and our approach to LLMs is no different. By bringing the power of LLMs to the data, without compromising security or governance, we enable customers and partners to achieve two things: make enterprises smarter about their data and enhance user productivity in secure and scalable ways.

Running LLMs in Snowflake to accelerate time to insights

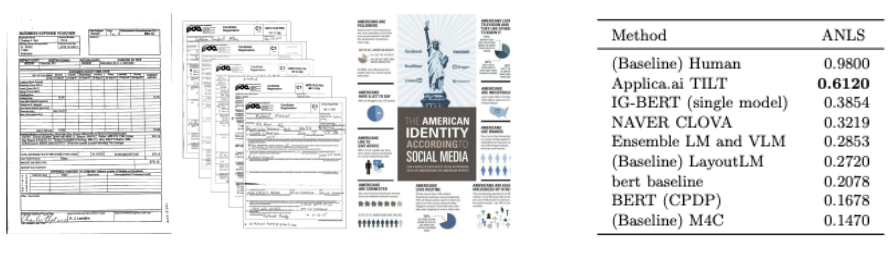

Unstructured data within documents, emails, web pages, images, and more is one of the fastest-growing data types, but there’s still no easy way to aggregate and analyze it to derive valuable insights. To continue bringing to life the vision of the Snowflake Data Cloud to securely offer, discover, and consume all types of governed data, we completed the acquisition of Applica, winner of the Document Visual Question Answering Challenge with its innovative TILT (Text-Image-Layout-Transformer) model, a purpose-built, multi-modal LLM for document intelligence.

To make unstructured data more useful, we continue to put the preview integration of Applica’s groundbreaking LLM technology in the hands of customers, minimizing the manual document labeling and annotation that organizations need to fine-tune models for their own documents.

Applica is just one example of many leading LLMs that customers can run inside of Snowflake in a secure and compliant way. Stay tuned for more news in this area at our Summit in Vegas.

A wave of ways to make more users more productive

As more companies center their growth opportunities around digital strategies and the number of developers continues to grow, LLMs provide an opportunity to increase developer productivity by reducing the need for manual coding of repetitive or boilerplate tasks, and Snowflake developers will share in that opportunity. To help you find the data you need and derive insights in the shortest time, we are developing code autocomplete, text-to-code, and text-to-visualizations in Snowflake worksheets for both SQL and Python. And to maximize the productivity of Snowflake customers, we are also developing LLM-powered search experiences across all of Snowflake, from our documentation to Snowflake Marketplace.

Putting LLMs in the hands of the vast majority of employees who do not code can generate much greater efficiencies across an organization. A common barrier to AI adoption for non-coding business teams has been the complexity of AI. LLMs were no different until ChatGPT showed the world how, through an interactive application, anyone could leverage the power of these models. This gap between LLMs and the people who can use them, including non-technical colleagues, is one that Streamlit is designed to bridge. In as few as 25 lines of code, anyone can build an application that provides an interface for better guided prompting and interaction with LLMs, such as the GPT-3 dataset generator.
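To give a feel for how small such an app can be, here is a minimal sketch of a guided-prompting front end. It is not one of the apps mentioned above: the form fields, the `build_prompt` helper, and the `TONES` list are all hypothetical, and the sketch stops at assembling the prompt, since which LLM API you send it to is up to you. It assumes Streamlit is installed (`pip install streamlit`).

```python
TONES = ("neutral", "friendly", "formal")  # hypothetical choices for the form


def build_prompt(task: str, context: str = "", tone: str = "neutral",
                 max_words: int = 100) -> str:
    """Assemble a structured prompt from the guided form fields."""
    if not task.strip():
        raise ValueError("task must not be empty")
    if tone not in TONES:
        raise ValueError(f"tone must be one of {TONES}")
    parts = [
        f"Task: {task.strip()}",
        f"Tone: {tone}",
        f"Answer in at most {max_words} words.",
    ]
    if context.strip():
        parts.append(f"Context:\n{context.strip()}")
    return "\n".join(parts)


def render_app() -> None:
    """Draw the form; Streamlit reruns the script on every interaction."""
    import streamlit as st  # third-party; imported here so build_prompt works without it

    st.title("Guided prompt builder")
    task = st.text_input("What should the model do?")
    context = st.text_area("Optional context")
    tone = st.selectbox("Tone", TONES)
    max_words = st.slider("Maximum words", min_value=20, max_value=500, value=100)
    if st.button("Build prompt"):
        if task.strip():
            st.code(build_prompt(task, context, tone, max_words), language=None)
        else:
            st.warning("Please describe the task first.")


if __name__ == "__main__":
    try:
        render_app()
    except ImportError:
        # Streamlit is not installed: show a sample prompt on the console instead.
        print(build_prompt("Summarize the attached report", tone="formal"))
```

Saved as `app.py`, this can be launched with `streamlit run app.py`. Keeping the prompt assembly in a plain function separate from the UI makes it easy to test and to reuse once a real LLM call is added.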

And as we continue to integrate Streamlit to run natively in Snowflake, the experience of both building and deploying apps powered by any LLM will become more secure, scalable, and enjoyable.

Bringing the power of LLMs to the data

Snowflake is quickly putting built-in functionality to distill insights from unstructured data, LLM-powered user experiences, and infrastructure for leading LLMs and their front-end applications directly into the hands of customers. To achieve all this, we continue to build with a clear focus on the security and governance of data by bringing the work—in this case the LLMs—to the data.